I am Hao GONG, former Expert in Autonomous Driving and Visual Perception Tech Lead at Enjoy Move Tech. Previously, I was Co-Founder and Algorithm Scientist at Landmark Vision Tech. Before that, I was an Expert in ADAS/Auto Driving R&D at OFilm Group and a Research Scientist at Third Research Institute of Ministry of Public (TRIMP). I received my PhD from GIPSA Lab at Université Grenoble Alpes under the supervision of Prof. Michel DESVIGNES, where I specialized in combinatorial-optimization-based medical image processing and classification. Prior to that, I received my Bachelor's and Master's degrees in Automation and Pattern Recognition from the School of Automation at Southeast University.

News and Updates

[Mar 2024] Our paper Comparison of Methods in Skin Pigment Decomposition is on arXiv.

Research

I am interested in machine/deep learning, computer vision and optimization, with applications to autonomous driving, intelligent traffic and medical image analysis.

Journal Publications

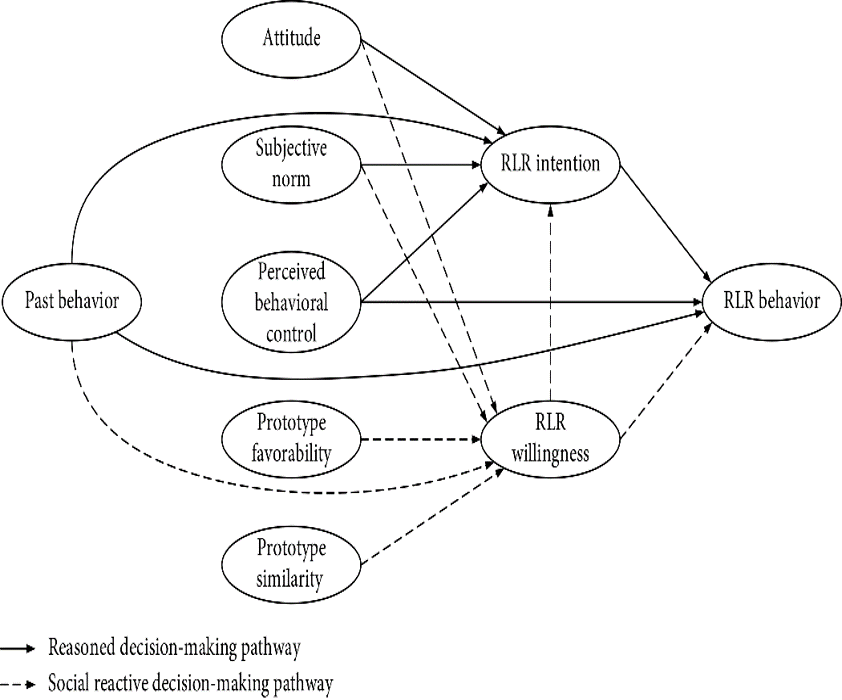

Understanding Electric Bikers’ Red-Light Running Behavior: Predictive Utility of Theory of Planned Behavior vs Prototype Willingness Model

Tianpei Tang, Hua Wang, Xizhao Zhou, Hao Gong

Journal of Advanced Transportation (JAT), 2020

paper

We aim to understand e-bikers’ red-light running (RLR) behavior using structural equation modeling. Specifically, we analyze e-bikers’ RLR behavior with the theory of planned behavior (TPB), the prototype willingness model (PWM), and their combined form, the TPB-PWM model, and compare the predictive utility of the three.

Conference Proceedings

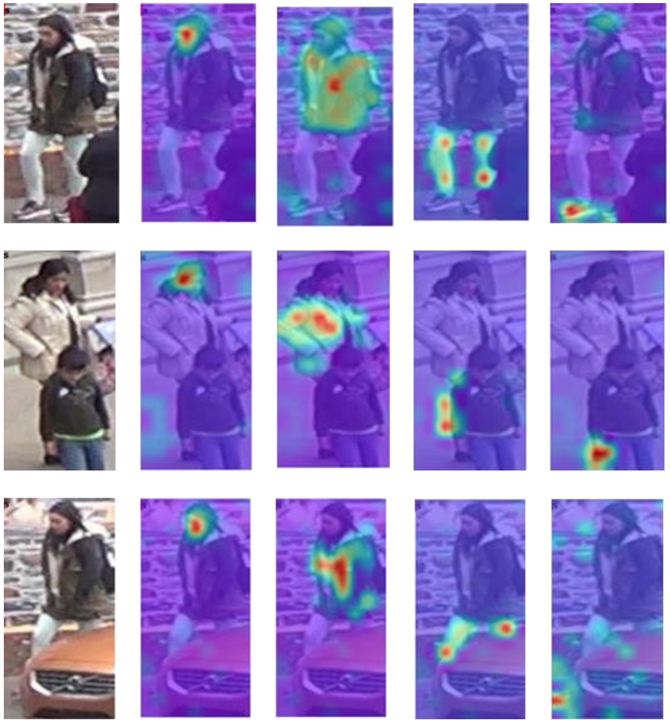

Pose Estimation and Occlusion Augmentation Based Vision Transformer for Occluded Person Re-Identification

Yilin Wei, Dan Niu, Hao Gong, Yichao Dong, Xisong Chen, Ziheng Xu

Jiangsu Annual Conference on Automation (JACA), 2022

paper

We propose the Pose Estimation and Occlusion Augmentation Based Vision Transformer (POVT), which leverages a Pose Estimation Guided Vision Transformer (PEGVT) and an Occlusion Generation Module (OGM) to extract discriminative partial features.

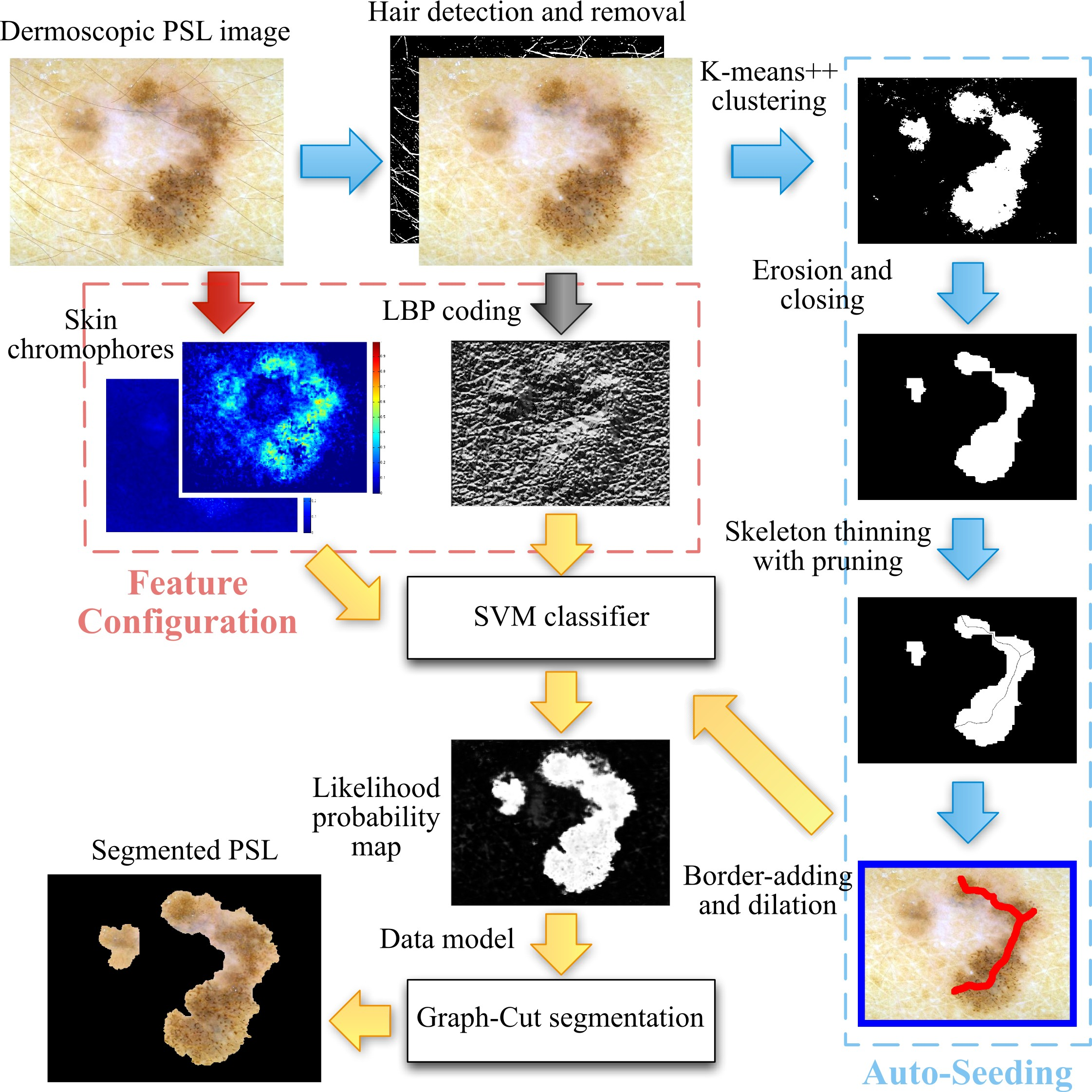

New Data Model for Graph-Cut Segmentation: Application to Automatic Melanoma Delineation

Razmig Kéchichian*, Hao Gong*, Marinette Revenu, Olivier Lézoray, Michel Desvignes (* equal contribution)

IEEE International Conference on Image Processing (ICIP), 2014

paper

We define a new data model for graph-cut image segmentation, according to probabilities learned by a classification process. Unlike traditional graph-cut methods, the data model takes into account not only color but also texture and shape information.

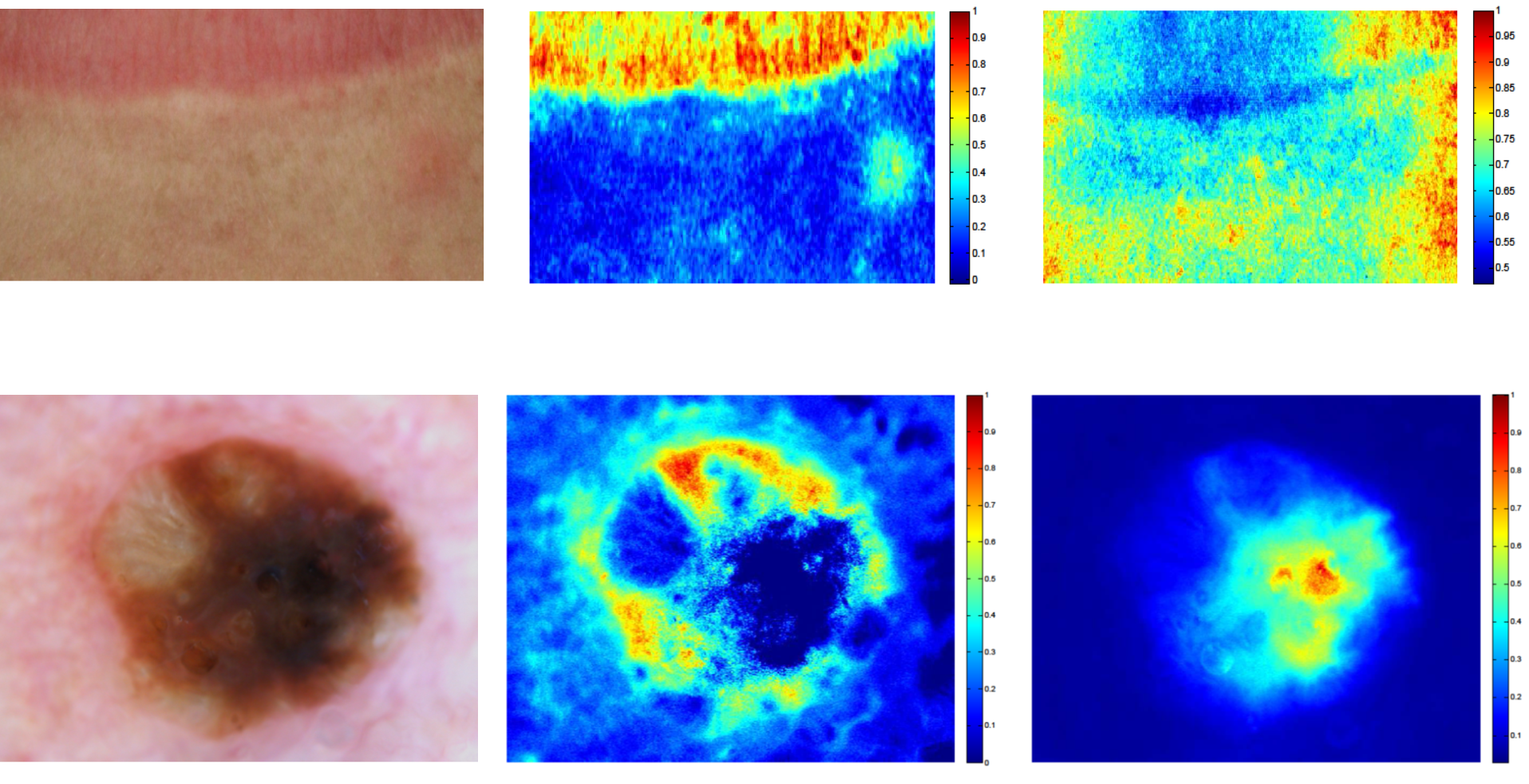

Quantification of Pigmentation in Human Skin Images

Hao Gong, Michel Desvignes

IEEE International Conference on Image Processing (ICIP), 2012

paper

We show how our model-fitting method, based on the Beer-Lambert law, can quantify skin hemoglobin and melanin more accurately.

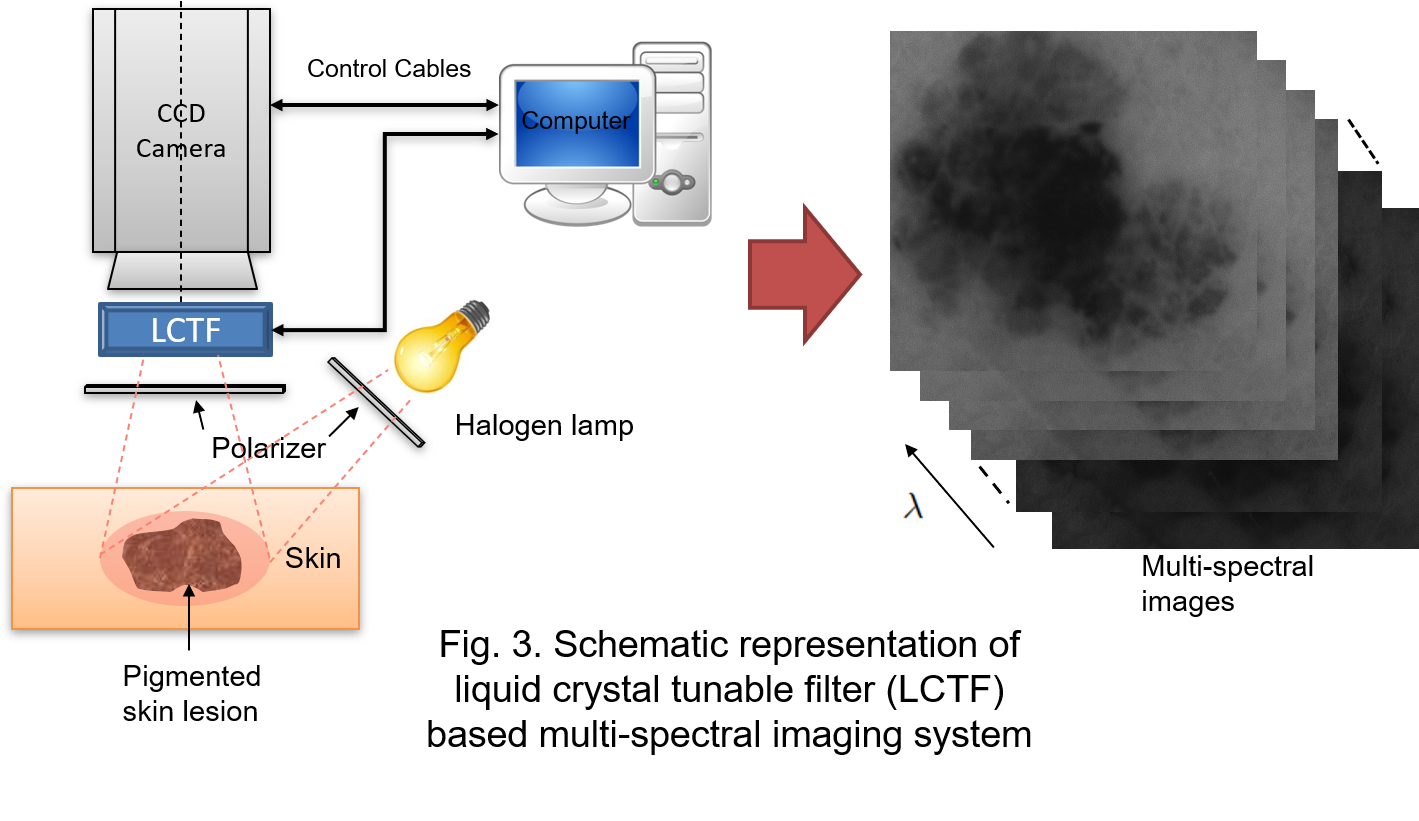

Skin Hemoglobin and Melanin Quantification on Multi-Spectral Images

Hao Gong, Michel Desvignes

IASTED International Conference on Imaging and Signal Processing in Health Care and Technology (ISPHT), 2012

paper / slides

We propose and compare two different approaches for quantification of skin hemoglobin and melanin on multi-spectral images. Quantitative evaluation through graph-cut segmentation on melanoma indicates that the model-fitting method obtains more accurate quantification than NMF.

Preprints

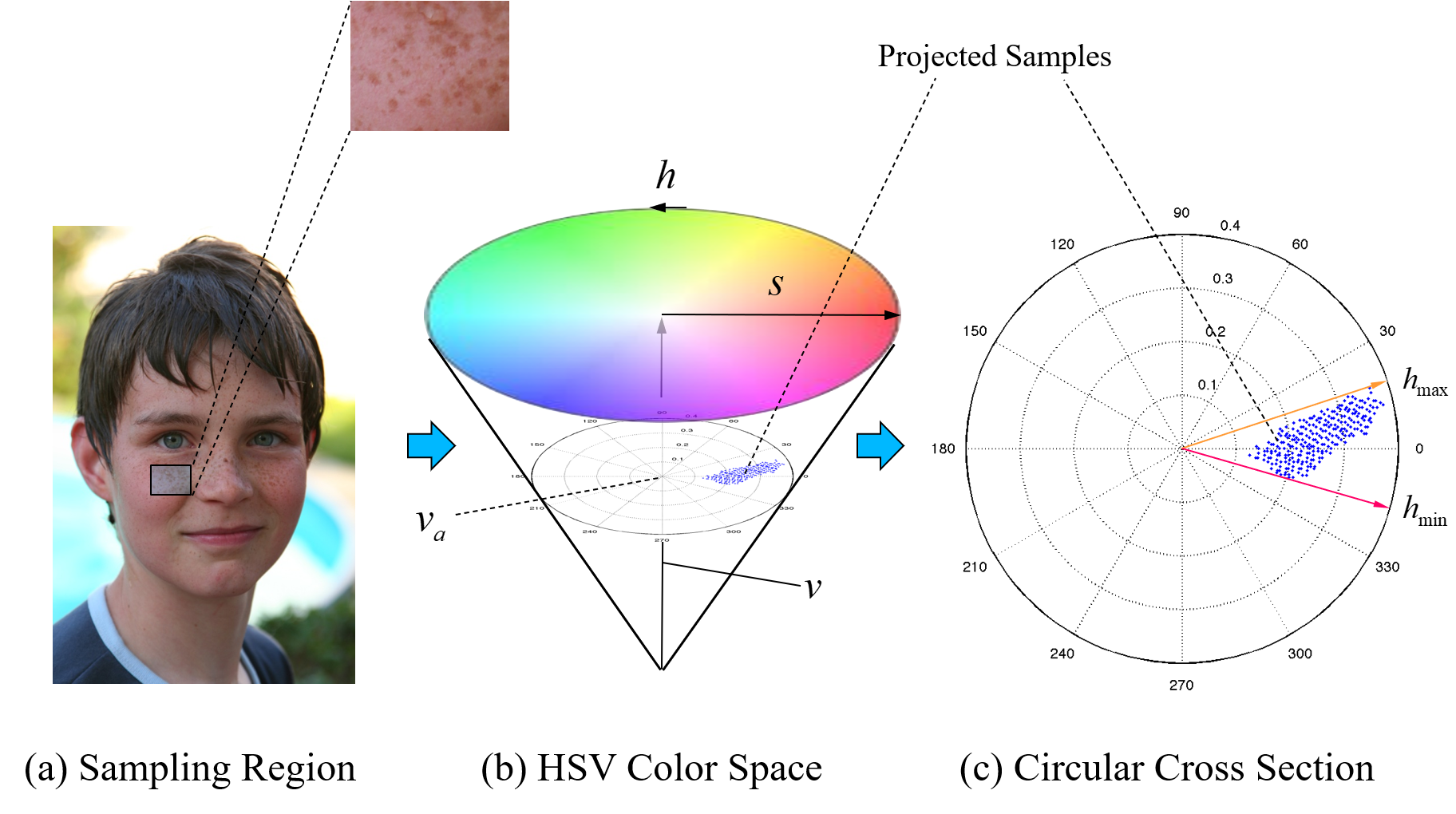

Comparison of Methods in Skin Pigment Decomposition

Hao Gong, Michel Desvignes

arXiv:2404.00552, 2024

Various methods for skin pigment decomposition are reviewed comparatively, and the performance of each method is evaluated both theoretically and experimentally. In addition, isometric feature mapping (Isomap) is introduced to improve dimensionality reduction performance in the context of skin pigment decomposition.

Interactive Graph-Cut Segmentation Using Pixel-Wise Posteriors

Hao Gong, Michel Desvignes

arXiv, 2024

In this paper we investigate the potential of nonlinear posteriors within the graph-cut optimization framework. We propose to reformulate the data energy in the energy function so that it considers not only a single pixel but also its neighborhood, and not only color information but also texture features. The data energy uses the posterior class probability learnt by a classifier to enhance the discrimination between foreground and background where color information alone is less discriminative.
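As a rough illustration of how posterior probabilities can feed a graph-cut data energy, the sketch below converts per-pixel foreground/background posteriors into unary costs via the negative log-posterior. This is a common choice and an assumption here; the exact formulation used in the paper is not stated above. The function name `data_costs` and the clamping constant `eps` are hypothetical.

```python
import math

def data_costs(posteriors, eps=1e-6):
    """Turn per-pixel class posteriors into graph-cut unary (data) costs.

    posteriors: list of (p_foreground, p_background) pairs from any classifier.
    Returns a list of (cost_fg, cost_bg) pairs using the negative log-posterior
    (an assumed, commonly used form; not necessarily the paper's).
    """
    costs = []
    for p_fg, p_bg in posteriors:
        # Clamp to avoid log(0) when the classifier is fully confident.
        p_fg = min(max(p_fg, eps), 1.0)
        p_bg = min(max(p_bg, eps), 1.0)
        costs.append((-math.log(p_fg), -math.log(p_bg)))
    return costs

# A pixel the classifier believes is foreground gets a low foreground cost.
example = data_costs([(0.9, 0.1), (0.5, 0.5), (1.0, 0.0)])
```

A label that the classifier considers likely is cheap to assign, so the min-cut is pulled toward the classifier's decision while the smoothness term still regularizes the boundary.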

Thesis

Segmentation d'Images Couleurs et Multispectrales de la Peau

Hao Gong

Ph.D. Thesis, Université de Grenoble, 2013

paper / slides

Accurate border delineation of pigmented skin lesion (PSL) images is a vital first step in computer-aided diagnosis (CAD) of melanoma. This thesis details a global framework for automatic segmentation of melanoma, which comprises two main stages: automatic selection of “seeds” useful for graph cuts and the selection of discriminating features. This tool compares favorably with classic graph-cut based segmentation methods in terms of accuracy and robustness.

Projects

Besides the academic work listed above, here is a sampling of my work in industry.

Semantic Visual Mapping and Localization (Semantic Visual SLAM) in Project AVP (Automated Valet Parking)

Enjoy Move Tech, 2022-09-30

Led the development of a new SLAM technique to build a semantic visual map for the self-localization feature in the AVP application, using inputs from CNN-based semantic detection of road markings and IMU-wheel encoder coupled odometry. As the vehicle maneuvers, the map evolves in the form of a dynamic semantic graph comprising semantic attributes and odometry. Graph optimization then periodically minimizes pose errors of semantic objects and egomotion, updating both local and global mapping. Once mapping is complete, vehicle re-localization can be effectively achieved in familiar environments, providing accurate pose estimates that guide vehicle navigation, path planning and control towards designated parking spots. This L4 autonomous driving feature is capable of executing parking maneuvers up to 1000 m with high precision in pose prediction (rotational error ≤ 1°, translational error ≤ 20 cm). Sensor configuration: Ultrasonic Sensors (USS) x12, AVM Camera x4, F-Camera x1, IMU x1, and 4D MMW Radar x1.

2.5D Multi-Target Multi-Camera Human Tracking and Re-Identification

Landmark Vision Tech, 2021-05-08

patent

Proposed a Pose Estimation and Occlusion Augmentation Based Vision Transformer (POVT) that significantly improves real-world occluded person re-identification. The pipeline comprises an anchor-free pedestrian detector (DarkNet53 backbone), a multi-object tracker using generic data association compatible with ReID feature similarity to handle occlusions, and a homography-based perspective mapping that converts 2D pedestrian positions into real-world coordinates for trajectory plotting. The system enables inter-camera MOT and intra-camera ID assignment, with real-time performance optimized across platforms via inference accelerators (TensorRT/ONNX/OpenVINO).
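The homography-based mapping step can be sketched as follows: a minimal, dependency-free illustration that assumes the ground contact point of a pedestrian is the bottom-center of the bounding box and that a 3x3 image-to-ground homography is already calibrated. The function names and the matrix `H_EXAMPLE` are hypothetical placeholders, not the project's actual calibration or code.

```python
def apply_homography(H, u, v):
    """Map an image point (u, v) to ground-plane coordinates with a 3x3 homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to get Euclidean ground coordinates.
    return x / w, y / w

def foot_point(box):
    """Bottom-center of a box (x1, y1, x2, y2), assumed to touch the ground plane."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2.0, y2

# Hypothetical calibration: pure scaling of 0.01 m per pixel, for illustration only.
H_EXAMPLE = [[0.01, 0.0, 0.0],
             [0.0, 0.01, 0.0],
             [0.0, 0.0, 1.0]]

u, v = foot_point((100, 50, 140, 200))
world_xy = apply_homography(H_EXAMPLE, u, v)
```

In practice the homography would be estimated per camera from point correspondences between the image and a floor plan, and the mapped foot points of each track would be accumulated into a trajectory.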

Automatic Search, Localization and Stacking of 2D Crystals

Landmark Vision Tech, 2020-03-10

The project aimed to develop a robotic system for automatically searching and stacking exfoliated 2D crystals to build van der Waals (vdW) heterostructures. Our primary contribution lay in the computer vision (CV) part of the system, which significantly reduced repetitive manual tasks and served as the backbone of the automation. It is capable of recognizing 2D crystals, locating and delineating their boundaries, and telling the system when to pick up during the stamping process. The CV algorithms comprised five components: blur-detection-based auto-focusing; machine-learning-based detection of Newton’s rings; Mask R-CNN and transfer-learning-based 2D material classification and segmentation with respect to identity (graphite/hBN) and layer thickness; dynamic matching of target material samples using edge features; and wavefront detection of Newton’s rings through contour extraction and tracking. With these algorithms, the system can autonomously detect graphene/hBN flakes across varying layer thicknesses with a low error rate (<5%) and assemble them into vdW superlattices with minimal human intervention.

FaceLiveNet: End-to-End Networks Combining Face Verification with Interactive Facial Expression-Based Liveness Detection

Landmark Vision Tech, co-op with L3i, Université de La Rochelle, 2019-04-07

code

We proposed a holistic end-to-end deep network that performs face verification and liveness detection simultaneously. It is based on the Inception-ResNet structure and has two main branches, corresponding to face verification and facial expression recognition. Branch 1 extracts the embedded features for face verification, while Branch 2 calculates the probabilities of the facial expressions. The two branches have almost identical structures, which eases transfer learning. They were trained with different loss functions: class-wise triplet loss and softmax loss, respectively. Face detection was implemented with the Multi-Task CNN (MTCNN).

Trained Parking (SAIC Motor P2P Project)

Intelligent Vehicle and Auto Driving, OFilm, 2017-11-02

A long-distance autonomous parking feature with route memory, a.k.a. the “P2P (Point to Point) Valet Parking”, developed in collaboration with SAIC Motor, achieves a mapping distance of up to 100 m and centimeter-level localization accuracy. Inspired by ORB-SLAM, we proposed a multi-fisheye visual SLAM system tailored to vehicle trims and delivery standards. This L3 conditional automation supports autonomous parking via a one-off training process for SLAM mapping, requiring no in-vehicle human intervention on predefined paths and in controlled settings. For safety, users must monitor the system status in real time via a mobile app, maintaining activation and taking safety actions such as braking when necessary. Fisheye cameras with extended FOVs ensure accurate and robust pose estimation in confined spaces such as underground parking lots. To address the challenges posed by the lack of loop closing and fisheye-induced radial distortion, we introduced special descriptors to enhance feature matching and integrated precise absolute scale information from our vehicle motion model. Validation against real-world ground truth and the “Multi-FoV” synthetic datasets confirmed centimeter-level accuracy in 3D landmark reconstruction. The system runs on a Renesas RH850 MCU and a TI TDA2x SoC and supports real-time operation at 15 fps during the localization phase. Integration of a mapping environment evaluator and a map quality evaluator enhanced practicality and deployability.

Vehicle Kinematic Model, Penetrated AVM and Moving Object Detection

Intelligent Vehicle and Auto Driving, OFilm, 2016-07-25

patent

Built a calibrated and optimized vehicle motion model based on Ackermann steering and the Levenberg-Marquardt algorithm, serving as the basis of ADAS features such as Penetrated Around View Monitor (AVM) and Moving Object Detection (MOD). The former uses the estimated ego-motion to extrapolate and stitch historical Bird’s Eye View (BEV) frames, filling the gap caused by ego-vehicle occlusion to form a complete “penetrated” AVM. The latter leverages epipolar geometric constraints to segment moving objects from static backgrounds while performing SfM, powered by feature-level tracking (KLT optical flow), object-level detection (mean-shift clustering) and tracking (Kalman filter with the Hungarian algorithm), achieving a detection rate ≥ 95%, a false alarm rate ≤ 1% and latency ≤ 65 ms on TI Jacinto 5 upon delivery. Both facilitated the deployment of APA (Automatic Parking Assist).
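For readers unfamiliar with Ackermann-based motion models, the sketch below shows the widely used single-track (kinematic bicycle) simplification, integrated with a plain Euler step. It is an illustrative assumption, not the calibrated model described above: the Levenberg-Marquardt calibration is not shown, and the function name, wheelbase and input values are hypothetical.

```python
import math

def step_pose(x, y, yaw, speed, steer, wheelbase, dt):
    """Advance a rear-axle pose by one Euler step of the kinematic bicycle model.

    speed in m/s, steer (front-wheel angle) and yaw in radians,
    wheelbase in m, dt in s. Returns the new (x, y, yaw).
    """
    # Turning rate follows from the Ackermann relation R = wheelbase / tan(steer).
    yaw_rate = speed * math.tan(steer) / wheelbase
    x += speed * math.cos(yaw) * dt
    y += speed * math.sin(yaw) * dt
    yaw += yaw_rate * dt
    return x, y, yaw

# Hypothetical inputs: 2 m/s, 0.1 rad steering, 2.7 m wheelbase, 100 steps of 10 ms.
pose = (0.0, 0.0, 0.0)
for _ in range(100):
    pose = step_pose(*pose, speed=2.0, steer=0.1, wheelbase=2.7, dt=0.01)
```

Accumulating such steps gives the ego-motion between frames, which is the kind of quantity a feature like penetrated AVM needs in order to shift historical BEV frames under the vehicle.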

Talks

From GPUs to Edge Computing Devices: On the Deployment of Visual AI Model Inference

Guest Lecture at Shanghai Zhenhua Heavy Industries Company Limited (ZPMC), 2022-01-17

slides

An Overview of the Application of AI-Based Biometric Recognition Techniques in Financial Security

Guest Lecture at Bank of Communications, Pudong Branch, Shanghai, 2019-05-23

slides

Decomposition of Skin Color Image

Annual Seminar of GdR ISIS (Groupement de Recherche, Information Signal Image viSion), Paris, 2011-10-12

slides / website

Service

I have reviewed for ICIP 2014, ACCV 2022, ICASSP 2023 and ICASSP 2024.

Honors and Awards

[2010] National Scholarship of China

© 2024 Hao GONG. All Rights Reserved.
